There are about a million things you could do to improve your website to gain and convert more visitors. These things are different for each website. But there is one simple, objective thing that you can do on any website that will please your visitors, improve your conversion rate and improve your search engine rankings.

Do you want to know what it is? You guessed it, it’s page speed. In other words, page load time: the time it takes for a page to load completely in a visitor’s browser.

How important is page speed?

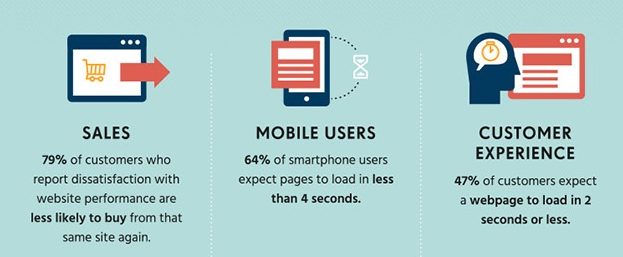

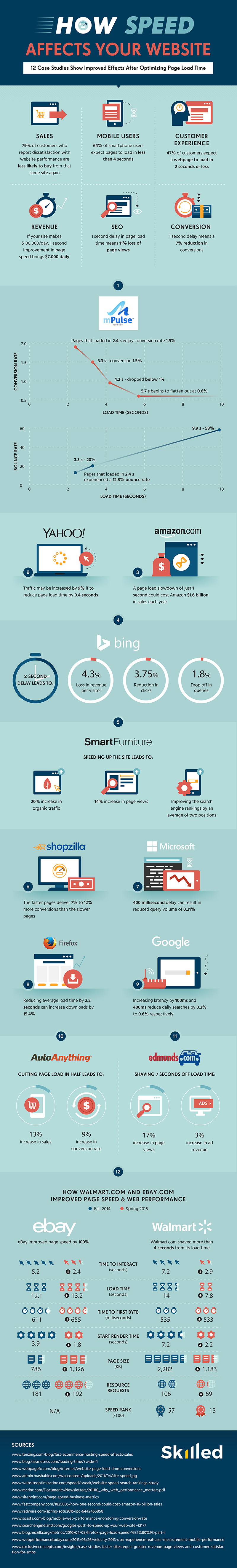

Research has shown that page speed has a massive impact on how long your visitors stay on your website, how many pages they read and how well they convert.

A study by Google showed that 53% of people will leave a mobile page if it takes longer than 3 seconds to load. Other research suggests that a delay in load time of just one second can leave you with a 7% reduction in conversions.

Check out the full infographic at the bottom of this post for more information.

Nobody likes a slow website, and this is especially true for mobile users. What’s more, Google takes page load time into account when ranking your website in its search results.

So it’s clear that improving your website speed is a win-win situation no matter how you look at it.

How to measure your website speed?

Measuring website speed can be difficult because the time it takes for your pages to load can differ widely between visitors.

If your website is hosted in New York and your visitor is from China, they will experience a different page load time than a visitor from a closer location.

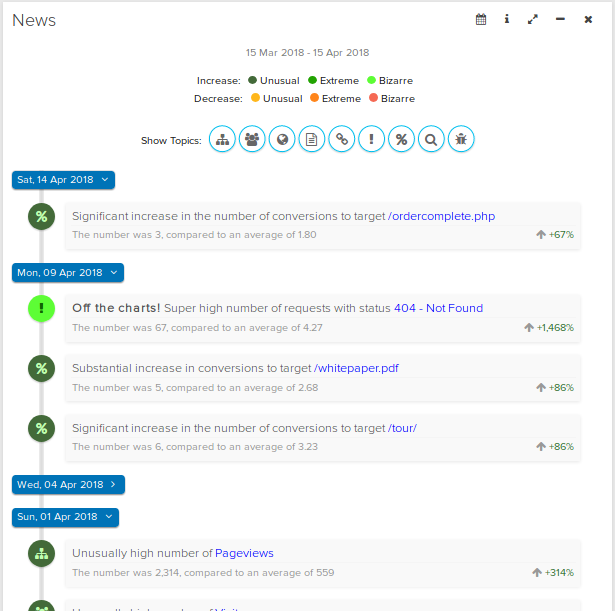

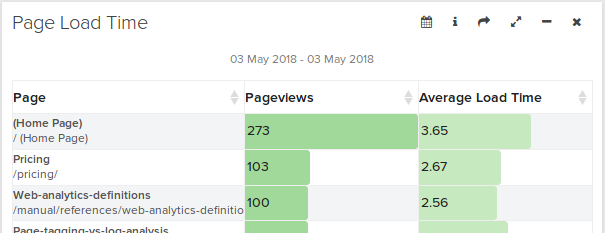

Logaholic’s new Page Load Time report measures your website speed for any visitor from any location whenever it can.

This gives you a representative sample of your average page speed for each and every page on your website across your entire audience.

Of course, you can use Logaholic’s segmentation filters to view your page load times per country, per device or per marketing segment.
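To make the idea of averaging and segmenting load times concrete, here is a minimal sketch in Python. It is not how Logaholic computes its report; the sample data, the `summarize` function and the field layout are all invented for illustration. It simply groups load time measurements by page or by country and reports the mean and median for each group.

```python
from collections import defaultdict
from statistics import mean, median

# Hypothetical samples: (page, country, load time in seconds).
# These values are invented for illustration only.
samples = [
    ("/home", "US", 1.2),
    ("/home", "CN", 3.8),
    ("/home", "US", 1.0),
    ("/pricing", "US", 2.0),
    ("/pricing", "CN", 4.0),
]


def summarize(samples, key_index):
    """Group load times by one column (0 = page, 1 = country)
    and return the mean, median and sample count per group."""
    groups = defaultdict(list)
    for sample in samples:
        groups[sample[key_index]].append(sample[2])
    return {
        key: {
            "mean": round(mean(times), 2),
            "median": round(median(times), 2),
            "count": len(times),
        }
        for key, times in groups.items()
    }


per_page = summarize(samples, key_index=0)
per_country = summarize(samples, key_index=1)

for name, stats in per_country.items():
    print(name, stats)
```

The median is included next to the mean because a handful of very slow visits can pull the average up, while the median shows what a typical visitor experiences.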

How to improve page speed?

So now that we have an idea of how long it’s taking our visitors to load up our pages, it’s time to see how we can improve those page load times.

Luckily, there are some great online tools that can help us do this. The free Pingdom website speed test tool is a good place to start. Also, Google’s PageSpeed Insights offers a great way to analyse your page speed and guides you through the steps needed to improve your page load times.

There are many things you can do, like minify your JavaScript and CSS files, optimise your images, enable compression on your web server and many more.
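To get a feel for why compression helps, the following sketch uses Python’s standard `gzip` module to compress a block of HTML and compare sizes. The HTML snippet is a made-up, deliberately repetitive payload, so the saving it shows will be larger than what you would see on a real page; on a live site, compression is normally switched on in the web server configuration rather than in a script like this.

```python
import gzip

# Hypothetical HTML payload, repeated to mimic the redundancy of real markup.
# Real pages are less repetitive, so they will compress less than this.
html = (
    "<div class='product'><h2>Title</h2><p>Description text</p></div>\n" * 200
).encode("utf-8")

# Compress the payload the same way a server would for "Content-Encoding: gzip".
compressed = gzip.compress(html)

saving = 1 - len(compressed) / len(html)
print(f"original:   {len(html)} bytes")
print(f"compressed: {len(compressed)} bytes")
print(f"saving:     {saving:.0%}")
```

Text-based files such as HTML, CSS and JavaScript benefit the most from this. Images in formats like JPEG and PNG are already compressed, which is why they are optimised separately.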

Simply applying the tips these tools have to offer will allow you to greatly reduce your page load time.

It might be a little work to implement all the optimisations, but take a look at the infographic below and you’ll see why it is worth the effort.

Infographic by Skilled.co

admin, May 4, 2018
